BaRT

Barrage of Random Transforms for Adversarially Robust Defense

Accepted for Oral Presentation at CVPR 2019

Edward Raff, Jared Sylvester, Steven Forsyth, and Mark McLean

Links

We hope to make the code open source soon. In the meantime, some relevant portions are available in the appendix of our paper.

Abstract

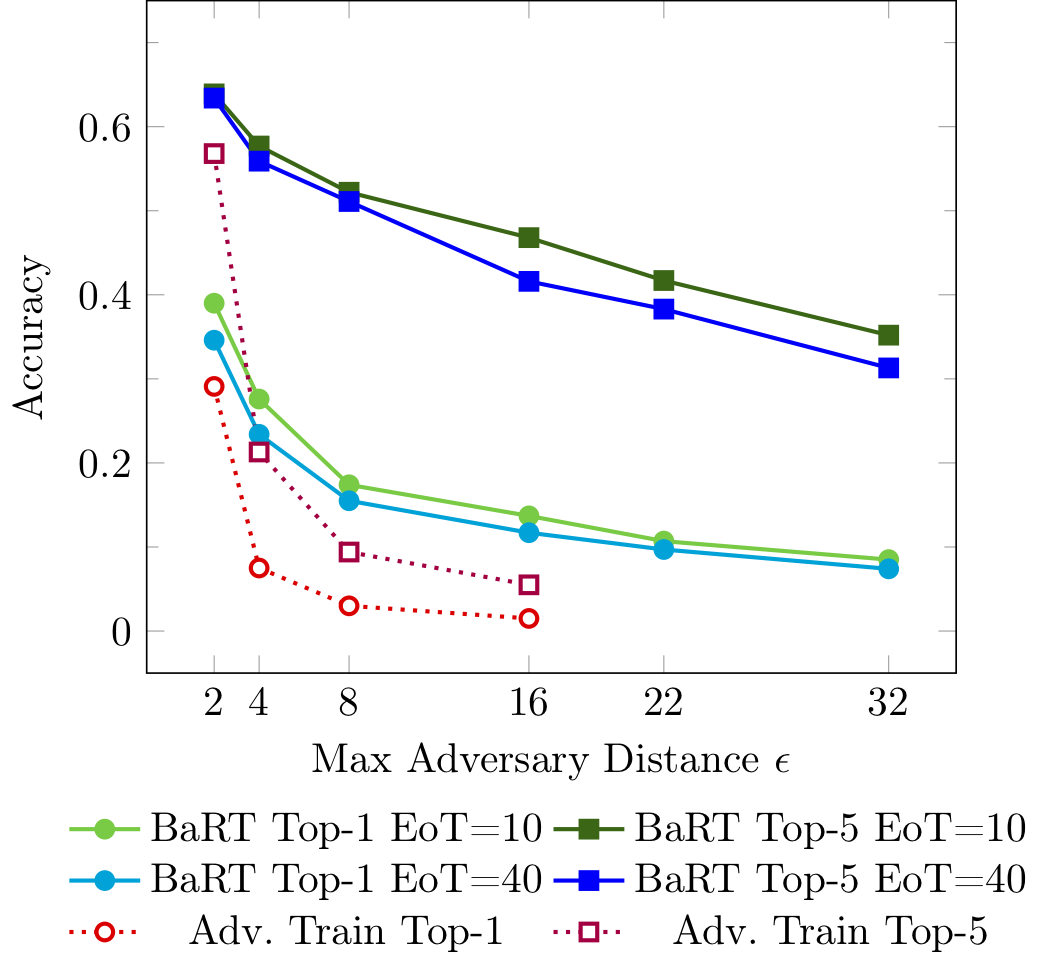

Defenses against adversarial examples are historically easy to defeat. The common understanding is that a combination of simple image transformations and various other defenses is insufficient to provide the necessary protection when the obfuscated gradient is taken into account. In this paper, we explore the idea of stochastically combining a large number of individually weak defenses into a single barrage of randomized transformations to build a strong defense against adversarial attacks. We show that, even after accounting for obfuscated gradients, the Barrage of Random Transforms (BaRT) is a resilient defense against even the most difficult attacks, such as PGD. BaRT achieves up to a 24x improvement in accuracy compared to previous work, and extends its effectiveness to a previously untested maximum adversarial perturbation of ε=32.
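To make the idea of a "barrage" concrete, the following is a minimal sketch of stochastically combining weak transformations. It is not the released BaRT code: the four transforms, their parameter ranges, the `max_transforms` limit, and the choice to sample without replacement are all hypothetical stand-ins chosen for illustration, and the image is assumed to be a flat list of pixel values in [0, 1].

```python
import random

# Hypothetical stand-in transforms on a flat list of pixel values in [0, 1].
# These are illustrative only and are NOT the transforms used in the paper.
def add_noise(pixels, rng):
    scale = rng.uniform(0.0, 0.05)  # random noise magnitude
    return [min(1.0, max(0.0, p + rng.uniform(-scale, scale))) for p in pixels]

def quantize(pixels, rng):
    levels = rng.randint(8, 64)  # random number of quantization levels
    return [round(p * levels) / levels for p in pixels]

def adjust_contrast(pixels, rng):
    gain = rng.uniform(0.8, 1.2)  # random contrast gain around mid-gray
    return [min(1.0, max(0.0, 0.5 + gain * (p - 0.5))) for p in pixels]

def shift_brightness(pixels, rng):
    offset = rng.uniform(-0.1, 0.1)  # random brightness offset
    return [min(1.0, max(0.0, p + offset)) for p in pixels]

TRANSFORMS = [add_noise, quantize, adjust_contrast, shift_brightness]

def barrage(pixels, rng, max_transforms=3):
    """Apply a randomly sized, randomly ordered subset of TRANSFORMS.

    Assumption: transforms are drawn without replacement; each one also
    draws its own random parameters when it is called.
    """
    k = rng.randint(1, max_transforms)
    chosen = rng.sample(TRANSFORMS, k)  # random subset in random order
    out = list(pixels)
    for transform in chosen:
        out = transform(out, rng)
    return out, [t.__name__ for t in chosen]

rng = random.Random(0)
image = [0.0, 0.25, 0.5, 0.75, 1.0]
defended, applied = barrage(image, rng)
```

Because the subset, the order, and the parameters all change on every call, an attacker cannot assume a fixed preprocessing pipeline, which is the intuition behind combining many individually weak defenses.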

Contact

- Edward Raff: raff_edward@bah.com

- Jared Sylvester: sylvester_jared@bah.com

- Steven Forsyth: sforsyth@nvidia.com

- Mark McLean: mrmclea@lps.umd.edu

Images

BaRT provides a stronger defense against gradient-based attacks than the previous state of the art, Adversarial Training (Kurakin, Goodfellow & Bengio, 2017).

In fact, BaRT achieves a higher Top-1 accuracy on ImageNet than Adversarial Training's Top-5 accuracy when the allowed adversarial distance is ε≥4, as measured in the ℓ∞ norm.

BaRT continues to provide defensive benefit up to an adversarial distance of ε=32.
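As a reminder of what an adversarial distance of ε in the ℓ∞ norm means, the sketch below measures the largest per-pixel change and clamps a perturbed image back into the allowed ε-ball. The function names are hypothetical, and the 0 to 255 pixel scale is an assumption made for illustration.

```python
def linf_distance(original, perturbed):
    """Largest absolute per-pixel difference between two images."""
    return max(abs(a - b) for a, b in zip(original, perturbed))

def project_linf(original, perturbed, eps, lo=0, hi=255):
    """Clamp each perturbed pixel to within eps of the original pixel.

    Assumption: pixels are on a 0 to 255 scale, so results are also
    clipped to the valid range [lo, hi].
    """
    out = []
    for a, b in zip(original, perturbed):
        b = min(a + eps, max(a - eps, b))  # stay inside the eps-ball
        out.append(min(hi, max(lo, b)))    # stay a valid pixel value
    return out

original = [10, 200, 250]
perturbed = [60, 190, 255]
projected = project_linf(original, perturbed, eps=4)
print(projected)                           # [14, 196, 254]
print(linf_distance(original, projected))  # 4
```

Under this measure, ε=32 permits every pixel to move by up to 32 intensity levels, a far larger budget than ε=4.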

Citation

@InProceedings{Raff_2019_CVPR,
  author = {Raff, Edward and Sylvester, Jared and Forsyth, Steven and McLean, Mark},
  title = {Barrage of Random Transforms for Adversarially Robust Defense},
  booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  month = {June},
  year = {2019}
}